The Archetype AI Blog

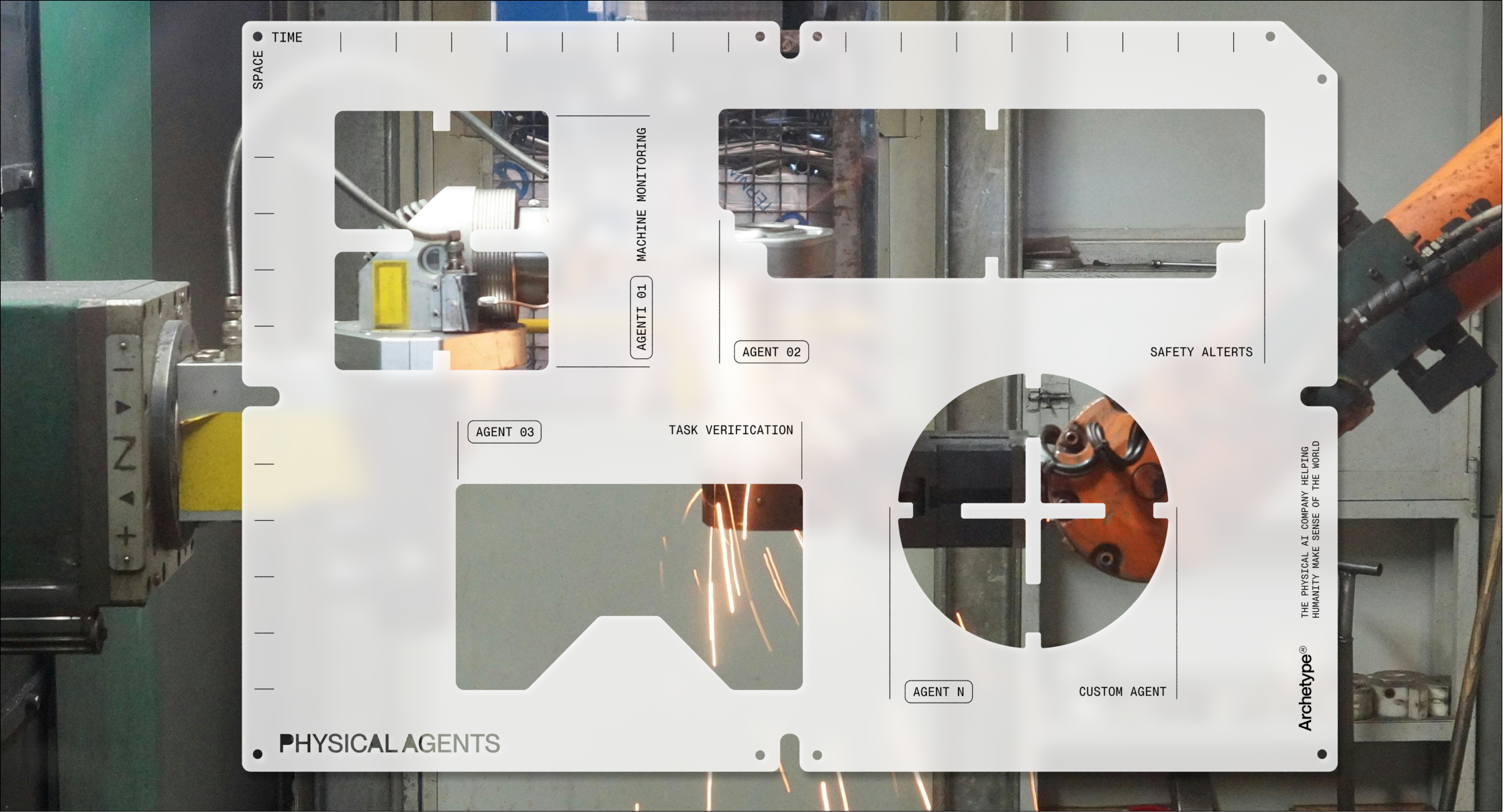

Real-World Intelligence, Powered by Physical Agents

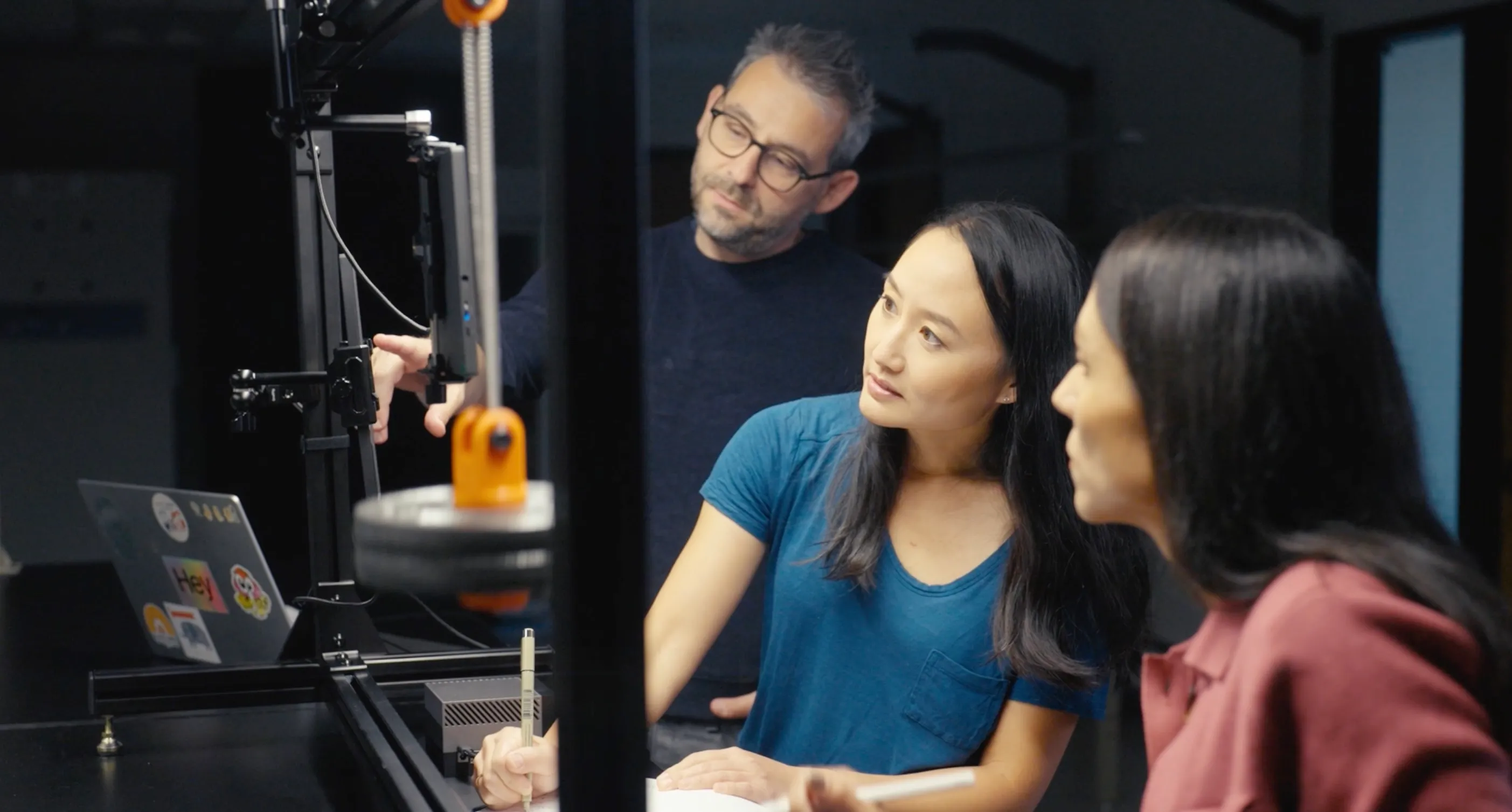

Introducing Physical Agents — deployable AI applications that interpret multimodal sensor data and deliver real-time intelligence for the physical world. Powered by Newton, our foundation model built for the real world.


All articles

Nov 26, 2025

Archetype Platform Is Now Available on AWS Marketplace

The Archetype platform is now available on AWS Marketplace, making it easier for developers to build and deploy Physical Agents.

Nov 20, 2025

Archetype AI Raises $35M Series A

We're announcing our $35M Series A to advance Newton, our Physical AI foundation model, and launch a platform enabling organizations to deploy Physical Agents that understand and act within real-world environments.

Nov 20, 2025

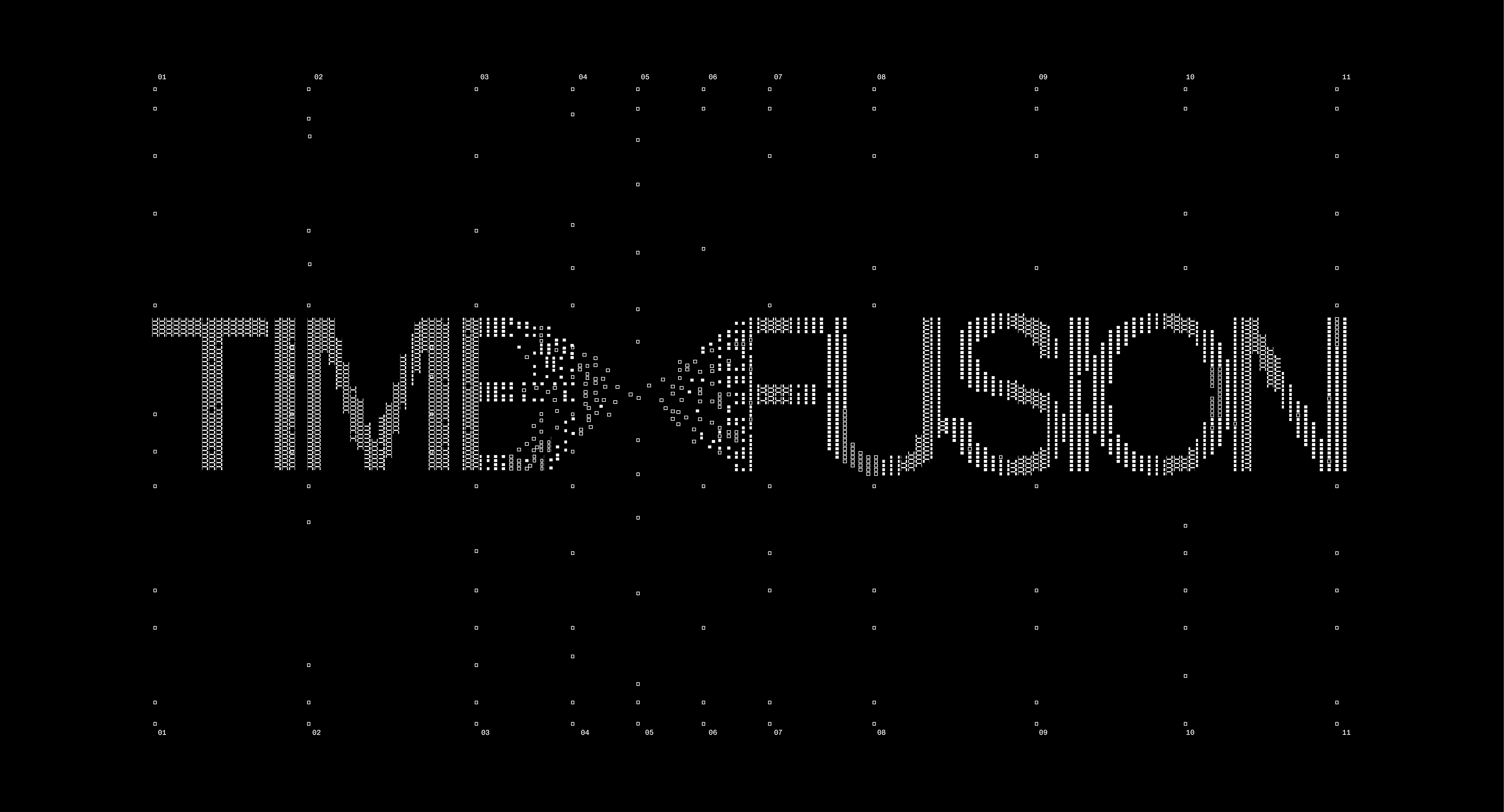

TimeFusion: Natural-Language Intelligence for Your Sensors

Introducing Newton TimeFusion, Archetype's 2.0B-parameter multimodal model that unifies human language and time-series sensor data using Universal Tokens. This breakthrough creates a "Physical AI" layer, allowing users to have natural conversations with sensors and machines for actionable insights, prediction, and control.

Jun 25, 2025

How Newton AI Is Transforming Manufacturing

Despite billions invested in industrial monitoring systems, the majority of manufacturing sensor data goes unused. Newton AI changes this by providing a foundation model that fuses data from different types of sensors to deliver actionable insights for safety, quality, and maintenance — without requiring months of custom development.

Apr 29, 2025

How The 184-Year-Old KAJIMA Is Embracing an AI Future in Construction

Through an innovative project involving a major canal reconstruction in Niigata, KAJIMA demonstrates how Physical AI can process terabytes of sensor data to improve efficiency, reduce waste, and preserve expert knowledge in the construction industry.

Mar 27, 2025

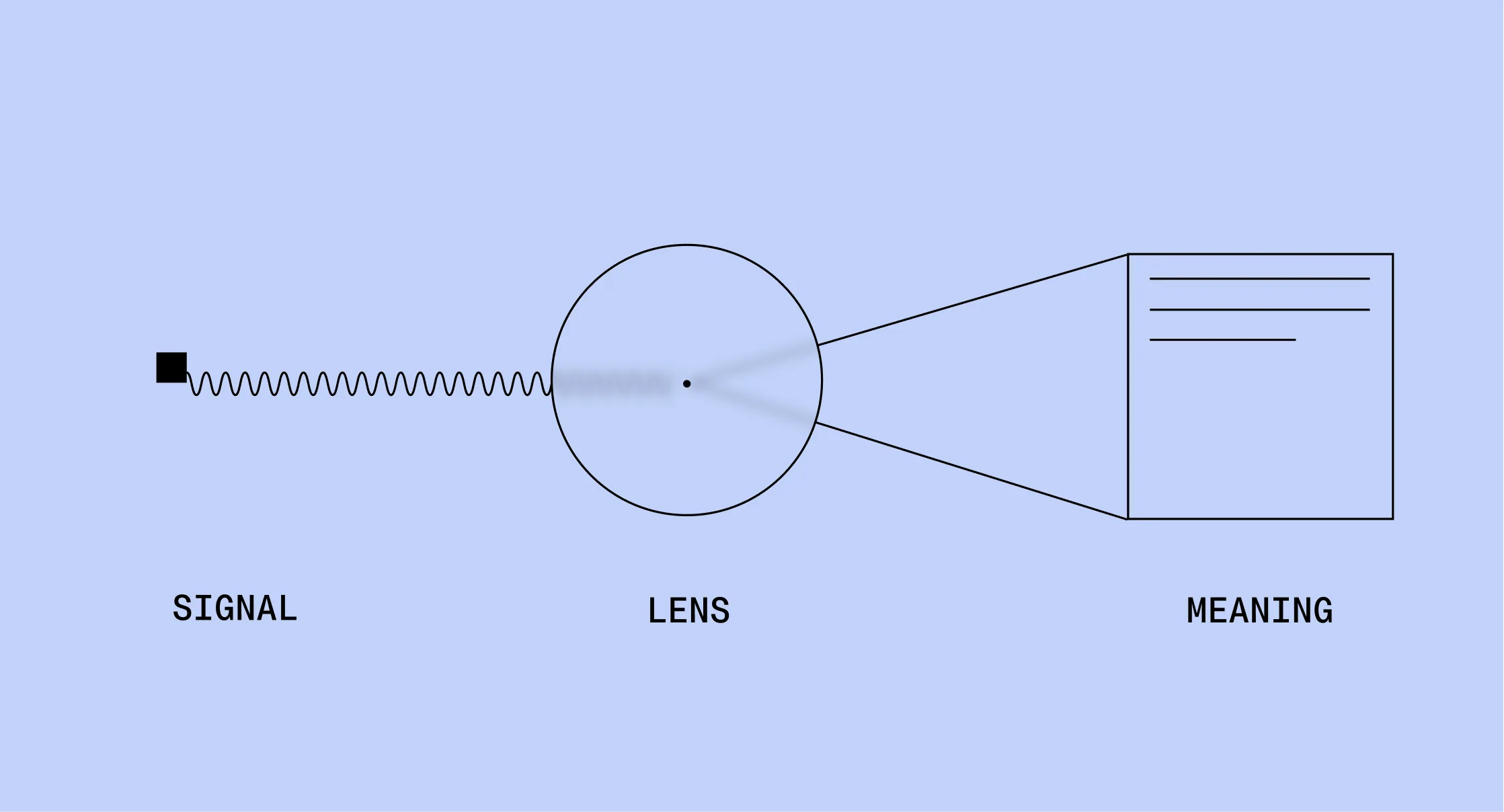

Unlock the Power of Physical AI with Lenses

As AI moves beyond screens into the physical world, we need new ways to understand and interact with it. Instead of chatbots or agents focused on automation, we're introducing Lenses — AI applications that continuously transform sensor data into actionable insights.

Feb 13, 2025

Building Physical AI for People, Not Robots

Physical AI is here, but the vision behind it is often reduced to robotics powered by multimodal AI models, and we believe this view is too narrow. In this article, we will outline an approach that focuses on enabling AI to independently uncover the underlying principles of the physical world and augment human intelligence rather than replacing humans.

Dec 23, 2024

2024: The Year of Physical AI

This was the year Physical AI moved from concept to reality, as the importance of using modern AI foundation models to solve real-world problems entered the mainstream. We are proud to be at the forefront of this movement. Let’s look at the year’s most defining moments from Archetype AI.

Dec 12, 2024

Connecting the Dots: How AI Can Make Sense of the Real World

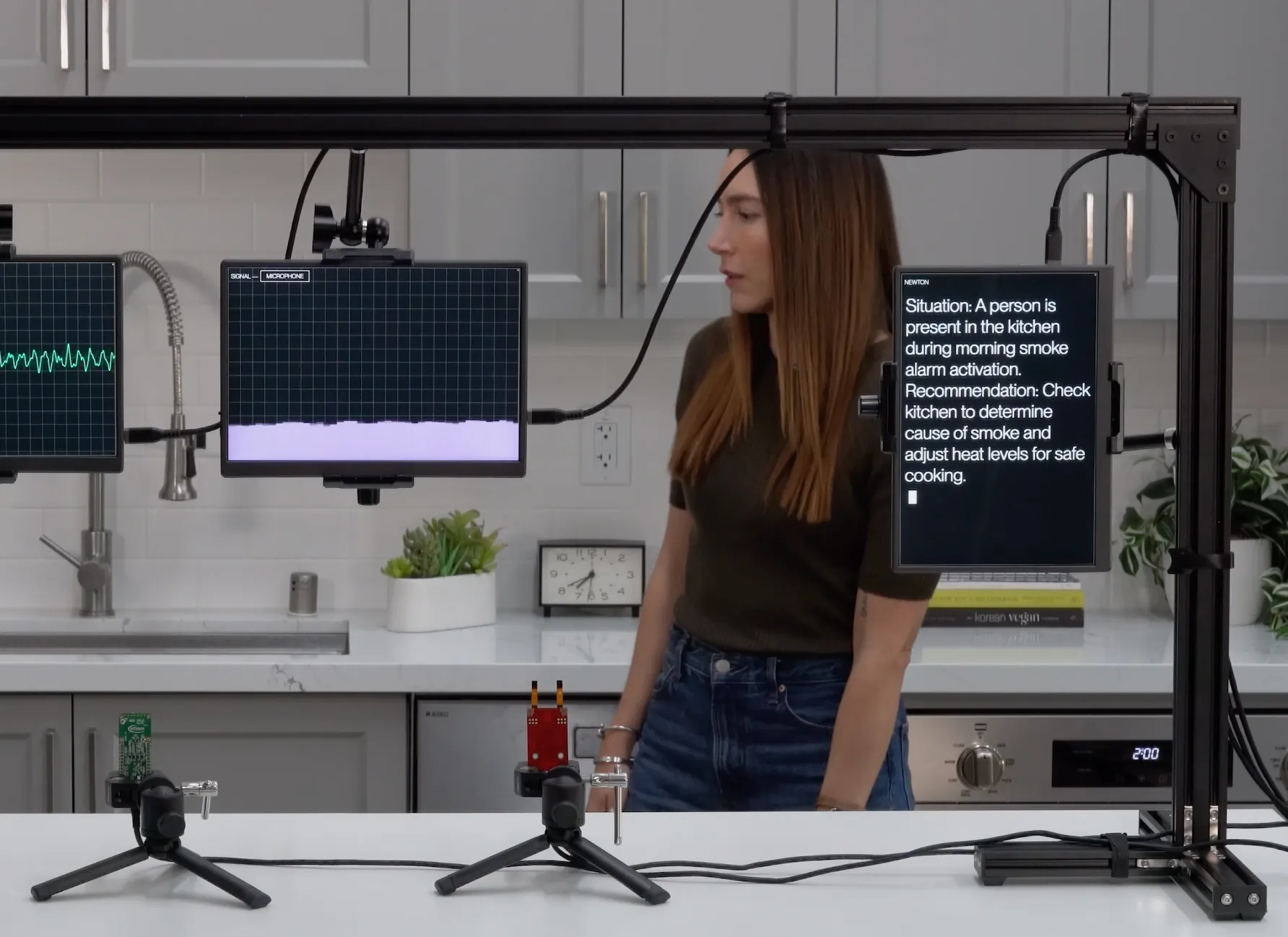

Humans can instantly connect scattered signals—a child on a bike means school drop-off; breaking glass at night signals trouble. Despite billions of sensors, smart devices haven’t matched this basic human skill. Archetype AI’s Newton combines simple sensor data with contextual awareness to understand events in the physical world, just like humans do. Learn how it’s transforming electronics, manufacturing, and automotive experiences.

Oct 30, 2024

Unveiling the Future of Physical AI at TEDAI San Francisco

Last week, we made waves at TEDAI San Francisco. On Day 1, we premiered our feature video, "A Phenomenological AI Foundation Model for Physical Signals," and on Day 2, our co-founder, CEO, and CTO, Ivan Poupyrev, took the stage for a panel discussion on the future of embodied AI.

Oct 24, 2024

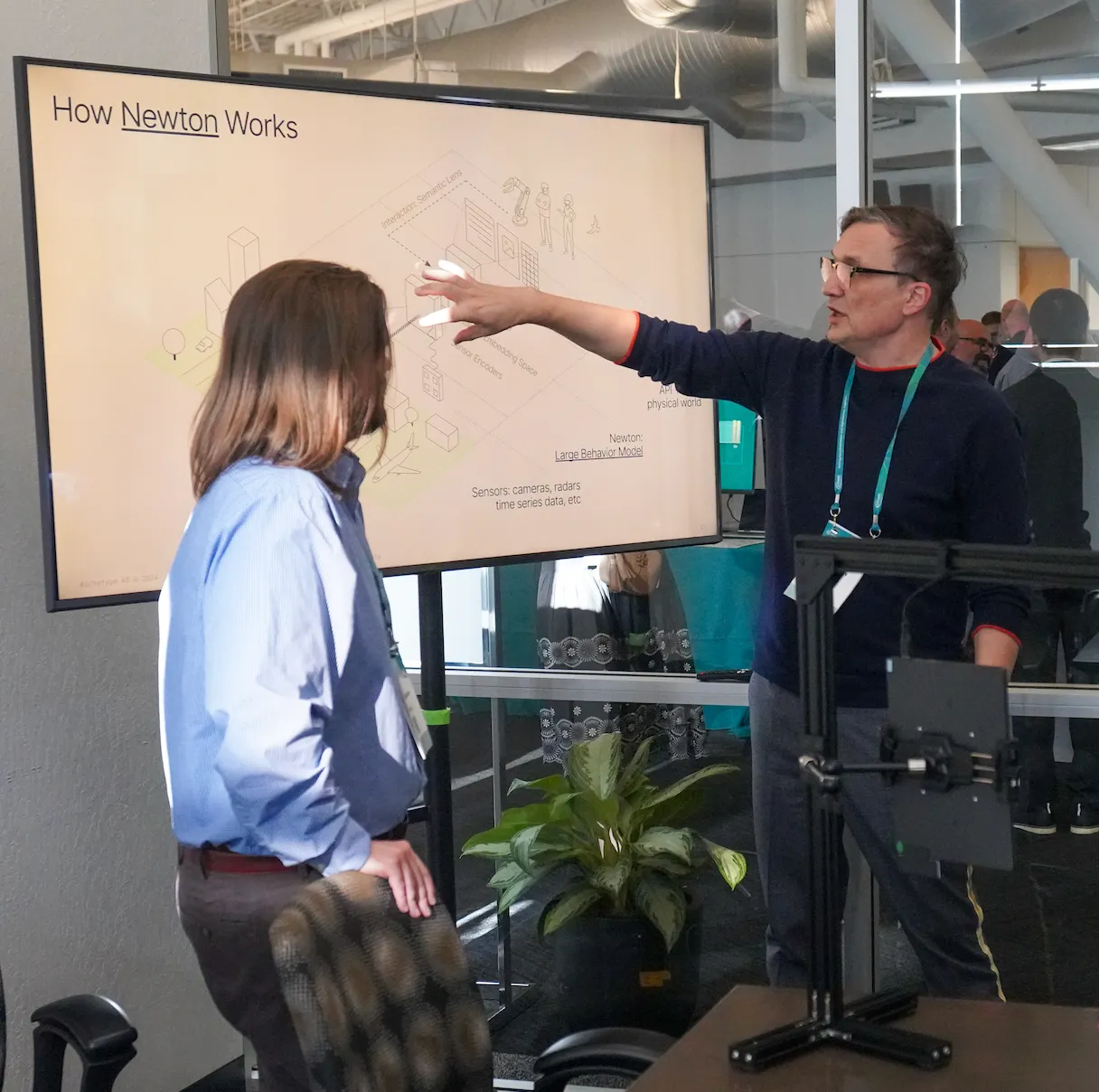

Archetype AI and Infineon Demonstrate a Foundation Model for Sensor Fusion

On October 17, 2024, Infineon and Archetype AI introduced the first-ever foundation model capable of understanding real-time sensor data. They presented a demo at OktoberTech™ Silicon Valley, Infineon's annual technology forum. Read on to learn more about the event and our approach to building a large behavior model that can reason about events in the physical world.

Oct 17, 2024

Can AI Learn Physics from Sensor Data?

We are excited to share a milestone in our journey toward developing a physical AI foundation model. In a recent paper by the Archetype AI team, "A Phenomenological AI Foundation Model for Physical Signals," we demonstrate how an AI foundation model can effectively encode and predict physical behaviors and processes it has never encountered before, without being explicitly taught underlying physical principles. Read on to explore our key findings.

Sep 25, 2024

Power of Metaphors in Human-AI Interaction

Currently, digital companions are the dominant metaphor for understanding AI systems. However, as the field of generative AI continues to evolve, it's crucial to examine how we frame and comprehend these technologies—it will influence how we develop, interact with, and regulate AI. In this blog post, we'll explore different metaphors used in AI products and discuss how they shape our mental models of AI systems.

Sep 5, 2024

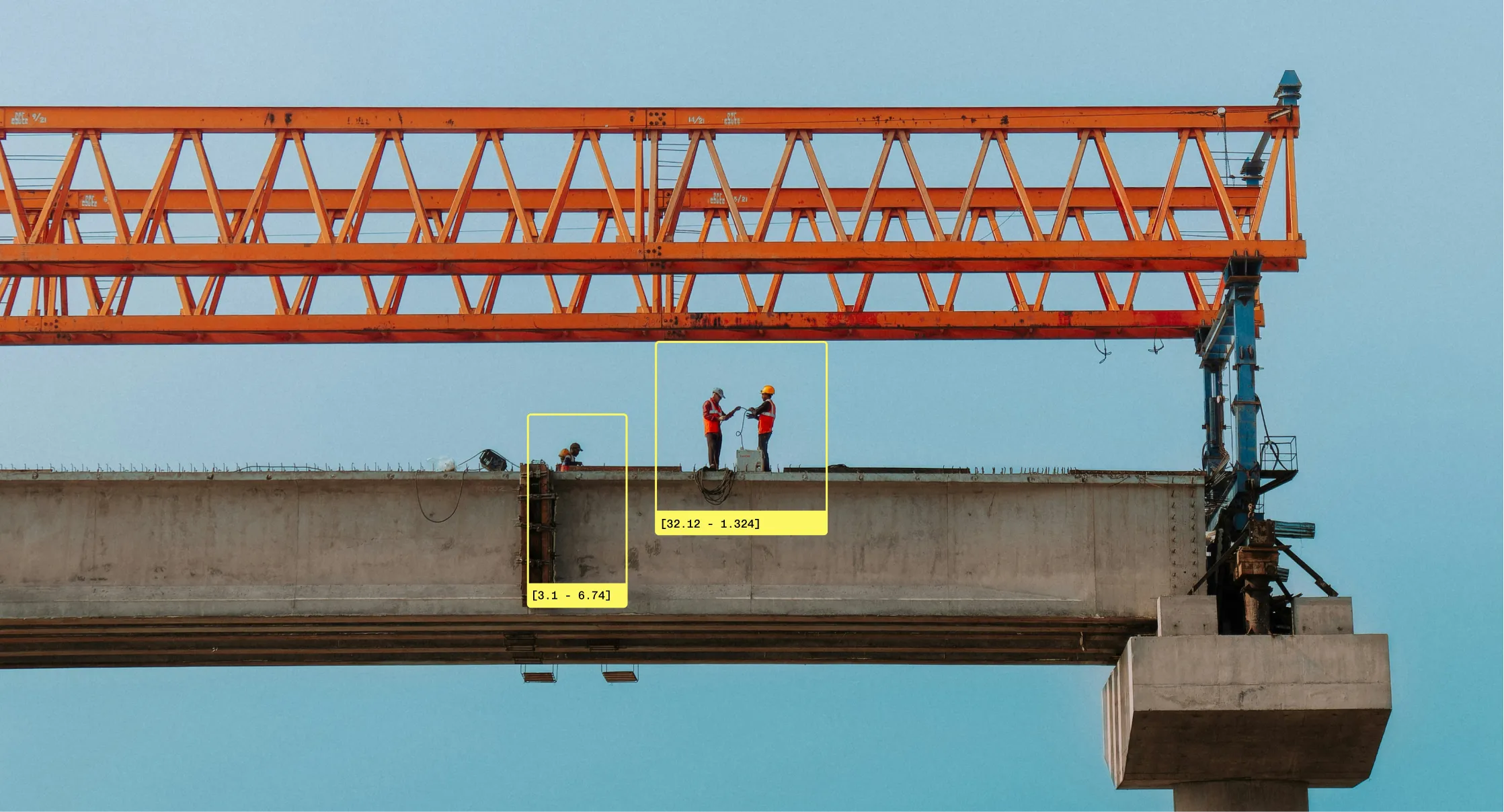

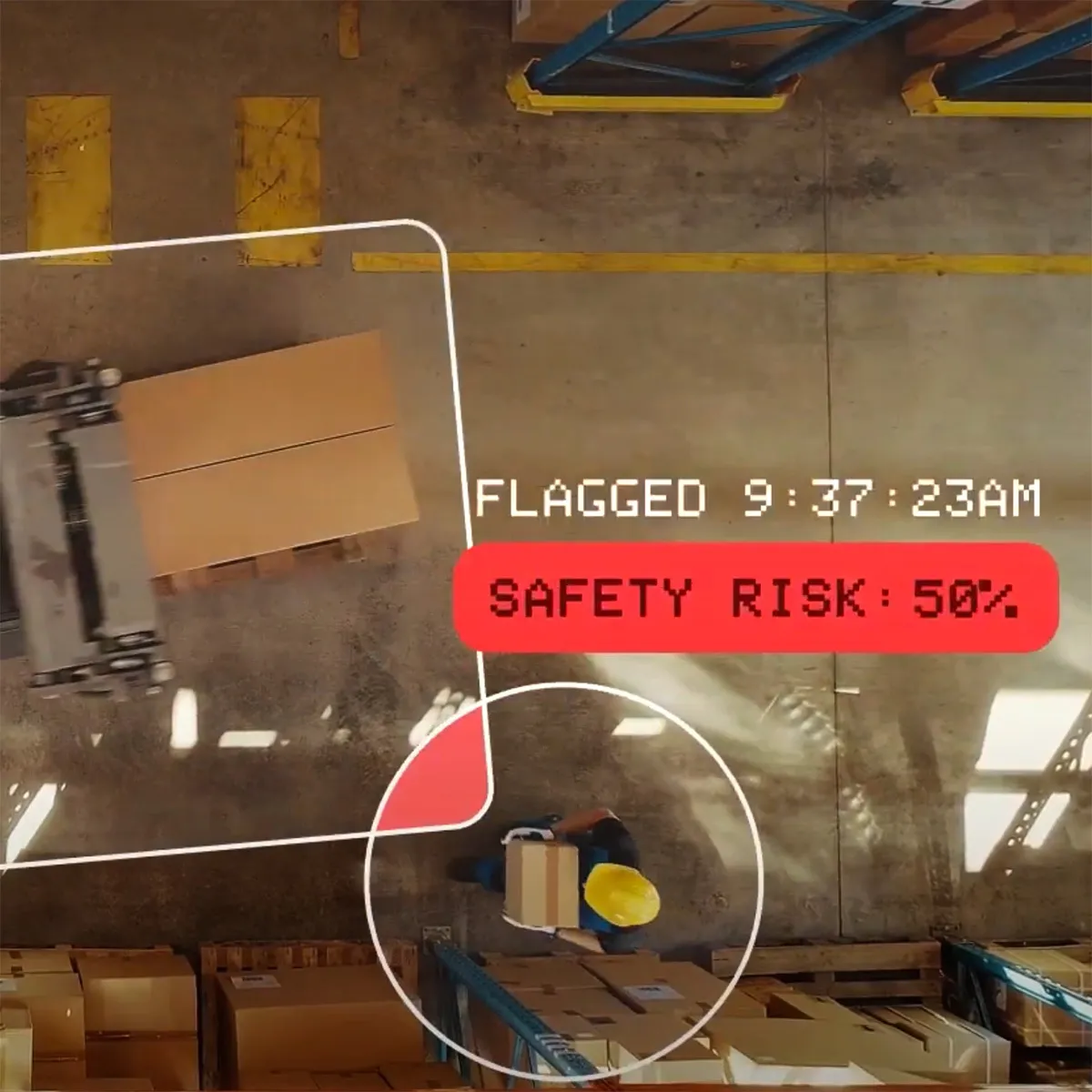

Bringing AI to Sensor Data: Newton On-Prem and Real-Time

Implementing AI in industrial settings comes with significant challenges like ensuring employee safety, estimating productivity, and monitoring hazards—all requiring real-time processing. However, sending sensor data to the cloud for analysis introduces latency and security concerns, driving up costs. The solution? Eliminate the cloud. With Archetype AI’s Newton foundation model, AI can run on local machines using a single off-the-shelf GPU, delivering low latency, high security, and reduced costs in environments like manufacturing, logistics, transportation, and construction.

Aug 26, 2024

Trillion Sensor Economy: How Physical AI Unlocks Real World Data

In a world where devices constantly collect and transmit data, many organizations struggle to harness its potential. At Archetype AI, we believe AI is the key to transforming this raw data into actionable insights. Join us as we explore how the convergence of sensors and AI can help us better understand the world around us.

Aug 13, 2024

How Soarchain Is Making Roads Safer With Physical AI

Imagine a world where your car responds not just to what you see but to what every vehicle, traffic light, and smartphone detects. Soarchain is making this a reality by combining their decentralized platform with Archetype AI’s developer API, Newton. Together, we will give drivers and autonomous vehicles a rich, real-time understanding of what’s happening on the roads around them.

May 21, 2024

What Is Physical AI? – Part 2

At Archetype, we want to use AI to solve real-world problems by empowering organizations to build for their own use cases. We aren’t building verticalized solutions; instead, we want to give engineers, developers, and companies the AI tools and platform they need to create their own solutions in the physical world.

Apr 8, 2024

We were featured in WIRED by Steven Levy

The renowned tech journalist Steven Levy featured Archetype AI in an exclusive article in WIRED. In his piece, Levy explores how our advanced AI models serve as a crucial translation layer between humans and complex sensors, enabling seamless interactions with houses, cars, factories, and more.

Apr 5, 2024

Introducing Archetype AI – Understand the Real World, in Real Time

Imagine a world where technology could help us make sense of the world's hidden patterns, understand the root causes of problems, and identify solutions.

At Archetype AI, we believe such a world is possible. We’re unveiling a new form of artificial intelligence that takes us a step closer to this reality.

Nov 1, 2023

What Is Physical AI? – Part 1

We’re building the first AI foundation model that learns about the physical world directly from sensor data, with the goal of helping humanity understand the complex behavior patterns of the world around us all.

Oct 24, 2023

Interacting With AI in the Physical World

This is Part 2 of our "What Is Physical AI?" blog series. To gain a deeper understanding of physical AI and Newton, our foundation model for the physical world, check out Part 1 of the series.

Oct 24, 2023

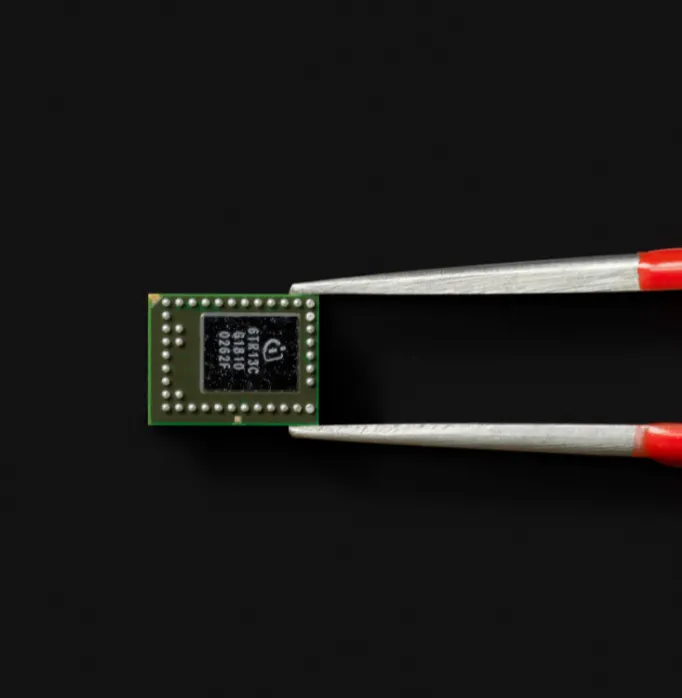

Infineon and Archetype AI Are Unlocking the Future of AI-Powered Sensors

Infineon will be the first company to use the Newton AI developer platform, offering device makers a combined package of sensor hardware and sensor AI.