The physical world often feels complex and chaotic, yet humans have learned to discover the laws of nature that govern it, from mechanics to thermodynamics. We have achieved this through repeated observations and precise measurements of different physical phenomena, e.g., the motion of objects or the temperature of gases. But what if AI could also uncover the governing laws of the physical world by analyzing a variety of sensor data on its own, without human guidance? What if AI could help us characterize highly complex physical system behaviors that we are unable to formally describe using equations, such as the behaviors of electrical grids, industrial machinery, or even human-computer interaction?

We are excited to share a milestone in our journey toward developing a physical AI foundation model. In a recent paper by the Archetype AI team, "A Phenomenological AI Foundation Model for Physical Signals," we demonstrate how an AI foundation model can effectively encode and predict physical behaviors and processes it has never encountered before, without being explicitly taught underlying physical principles. Read on to explore our key findings.

What it means for AI to understand the real world

We often assume that to understand the physical world, we need to learn the laws of physics first before applying them to tasks at hand. Naturally, we might think that AI also needs to be taught these same laws—such as the conservation of energy—so it can process data from the physical world through the lens of these laws. This approach often relies on the introduction of inductive biases, which are assumptions representing constraints and prior knowledge as mathematical statements.
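To make the idea of an inductive bias concrete, here is a minimal sketch of conservation of energy written as a mathematical constraint for an ideal spring-mass system. The function names, default parameters, and the idea of using the result as a training penalty are illustrative assumptions, not a description of any particular model.

```python
import math

def total_energy(x, v, mass=1.0, stiffness=1.0):
    """Kinetic plus potential energy of an ideal spring-mass system."""
    return 0.5 * mass * v * v + 0.5 * stiffness * x * x

def energy_penalty(trajectory, mass=1.0, stiffness=1.0):
    """Mean squared drift of total energy from its initial value.

    `trajectory` is a list of (position, velocity) pairs. A physics-informed
    model could add a term like this to its training loss (an assumption made
    for illustration) to discourage predictions that violate energy conservation.
    """
    e0 = total_energy(*trajectory[0], mass, stiffness)
    drift = [(total_energy(x, v, mass, stiffness) - e0) ** 2 for x, v in trajectory]
    return sum(drift) / len(drift)

# A constant-amplitude oscillation keeps its energy; a decaying one does not.
times = [0.1 * i for i in range(100)]
undamped = [(math.cos(t), -math.sin(t)) for t in times]
decaying = [(math.exp(-0.2 * t) * math.cos(t), -math.exp(-0.2 * t) * math.sin(t)) for t in times]

print(energy_penalty(undamped))  # essentially zero
print(energy_penalty(decaying))  # clearly positive
```

A penalty like this only makes sense for systems where the constraint actually holds, which is exactly why models built around such biases tend to be specialized.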

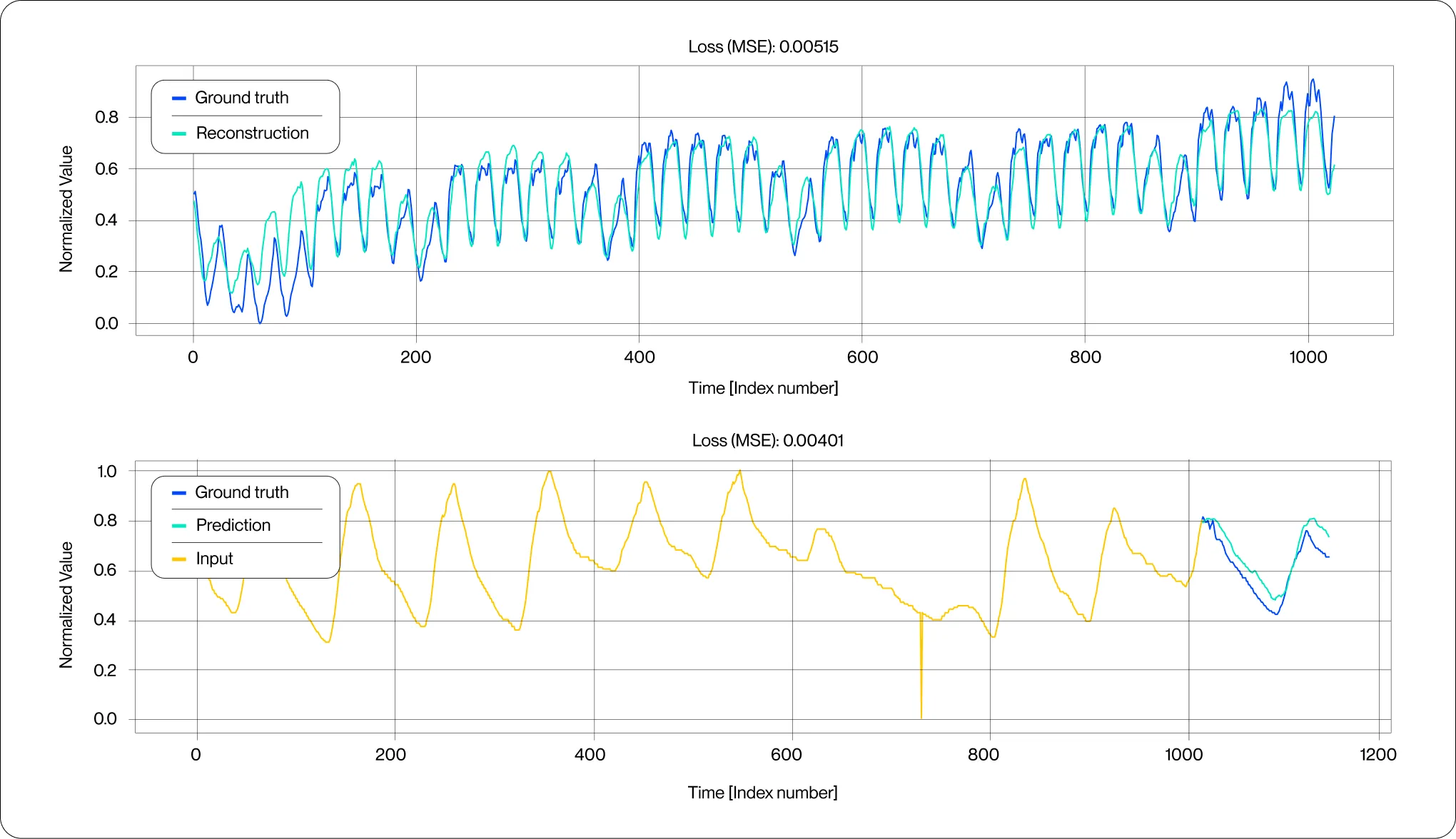

Newton, trained on millions of sensor measurements, predicts unfamiliar physical systems, like the spring-mass system shown here. Its zero-shot capabilities handle both complex and chaotic behaviors across classic and real-world systems.

However, the problem with this approach is that it leads to highly specialized AI models. For example, if we train a model to analyze fluid motion using the Navier–Stokes equations, it wouldn't be able to interpret radar Doppler images, which involve very different physical principles. Moreover, complex systems — like the electrical grid or automated industrial machines — can't be easily described using just a few physical laws. Defining such systems with simple equations is often extremely challenging.

But what if, instead of teaching AI the laws of the physical world, we let it discover them on its own, directly from data? This is, in fact, how humans uncovered many of the fundamental laws of nature. Think about how Johannes Kepler figured out planetary motion or how Georg Ohm derived the laws of electricity. They didn't know the laws beforehand — they observed, measured, and analyzed the behavior of planets and electricity to reveal hidden, recurring patterns, which we now call the laws of nature. These patterns not only explained their current observations but also enabled them to predict future behaviors, such as the movement of planets or the flow of electrical currents. Can AI do the same?

The butterfly effect in the age of AI

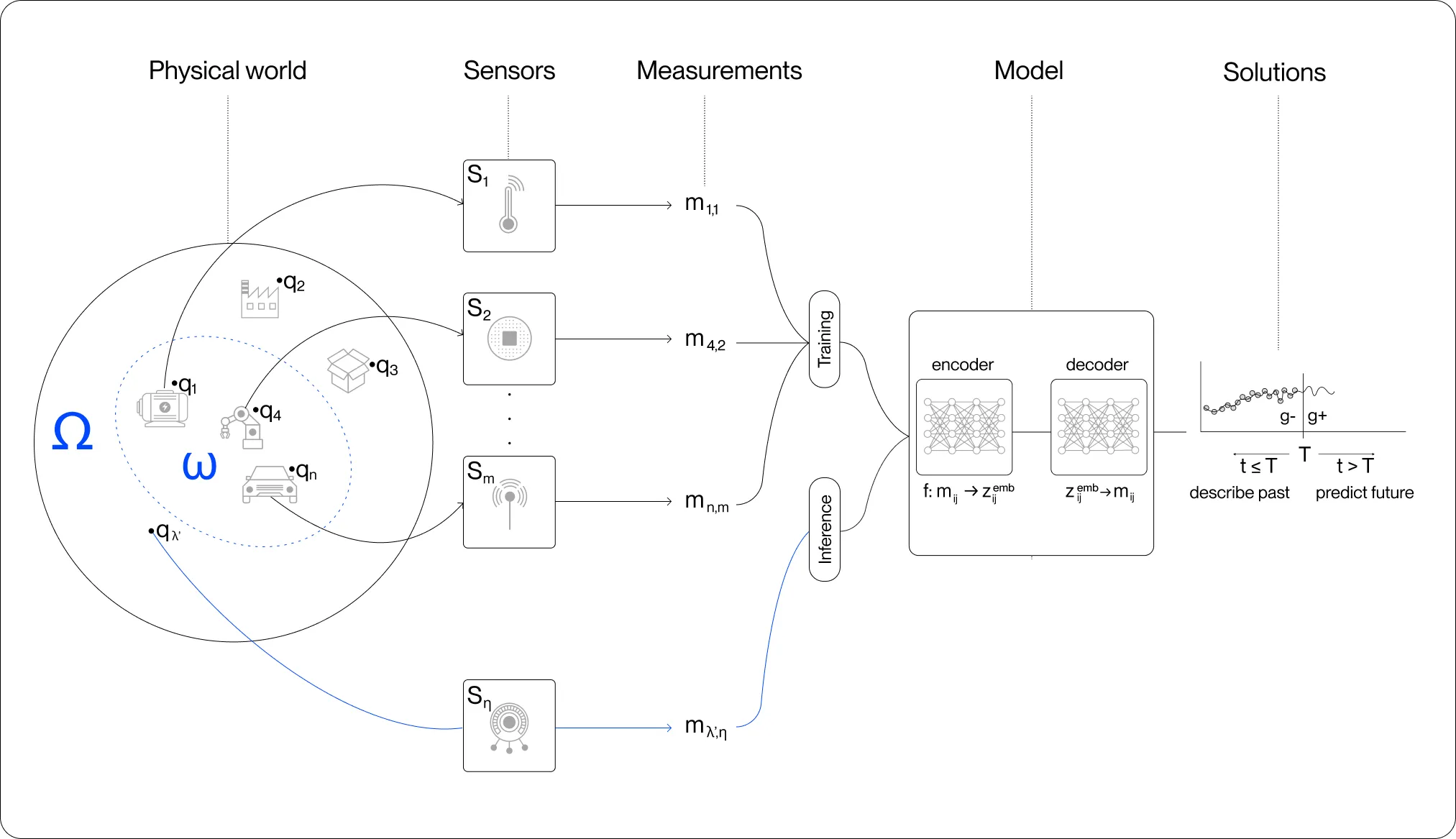

The challenge of understanding the physical world, whether for humans or AI, is that we cannot experience or learn about it directly — we can only observe it indirectly through sensors. These sensors might be our natural biological ones, like our eyes and ears, or the countless artificial sensors humans have invented to measure everything from acceleration to gas concentration. However, sensors always affect the outcome, distorting or obscuring the "true" physical behavior, making it harder to uncover the underlying laws that govern the physical world.

The true measure of whether an AI model has genuinely learned the governing behaviors of the physical world, rather than just mimicking sensor data, is its ability to generalize beyond the data it was trained on. The model should be able to accurately describe physical systems it has never encountered before, even when those systems are measured by sensors unfamiliar to the model. For example, if you train a model on data from the electric motor in your car, could it then predict noise pollution in San Francisco?

In other words, if you trained a model on data from a butterfly flapping its wings, could it forecast hurricane dynamics on the other side of the world?

Teaching Newton to decode the real world

Recent advances in AI foundation models, such as ChatGPT for natural language processing or vision models like Vision Transformers and CLIP, show that this level of generalization is possible. But the question remains: can we apply these same principles to data from the physical world? Can we create AI models that, using only raw measurements and without any prior knowledge of a system's inner workings, can predict the future behavior of any physical system?

To address this challenge, we have pre-trained our physical AI model, Newton, on 590 million samples from open-source datasets covering a wide range of physical behaviors, from electrical currents and fluid flows in rivers to optical sensor readings. Using a transformer-based deep neural network, Newton encodes all this raw, noisy sensor data and attempts to make sense of it by uncovering hidden patterns and statistical distributions in a universal latent space representing all these measurements.

Next, we trained several lightweight, application-specific neural network decoders to make use of the condensed insights produced by the encoder. These decoders perform real-world tasks, such as predicting future outcomes based on sensor data or reconstructing past events. In practical applications, Newton can take in real-time data from sensors measuring physical behaviors, or it can work with prerecorded sensor measurements to make accurate predictions.
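The split between one shared encoder and several small decoders can be sketched in a few lines. This is a toy illustration under stated assumptions, not Newton's implementation: `encode` is a hypothetical hand-written stand-in for the frozen transformer encoder, and the decoders are two-weight linear models fitted by least squares rather than neural networks.

```python
import math

def encode(window):
    """Hypothetical stand-in for a frozen, pre-trained encoder.

    Maps a window of raw samples to a tiny embedding: [last value, last change].
    """
    return [window[-1], window[-1] - window[-2]]

def fit_decoder(embeddings, targets):
    """Fit a two-weight linear decoder by least squares (normal equations)."""
    saa = sum(e[0] * e[0] for e in embeddings)
    sab = sum(e[0] * e[1] for e in embeddings)
    sbb = sum(e[1] * e[1] for e in embeddings)
    say = sum(e[0] * y for e, y in zip(embeddings, targets))
    sby = sum(e[1] * y for e, y in zip(embeddings, targets))
    det = saa * sbb - sab * sab
    return [(say * sbb - sab * sby) / det, (saa * sby - sab * say) / det]

def decode(weights, embedding):
    """Apply a fitted linear decoder to one embedding."""
    return weights[0] * embedding[0] + weights[1] * embedding[1]

# Synthetic sensor stream: a sampled sine wave.
signal = [math.sin(0.1 * i) for i in range(400)]
steps = range(2, len(signal) - 1)
embeddings = [encode(signal[t - 1 : t + 1]) for t in steps]

# Two tasks share the same embeddings; only the small decoders differ.
forecast_targets = [signal[t + 1] for t in steps]  # predict the next sample
backcast_targets = [signal[t - 2] for t in steps]  # reconstruct an earlier sample

split = 300  # fit on the first part of the stream, evaluate on the rest
forecaster = fit_decoder(embeddings[:split], forecast_targets[:split])
backcaster = fit_decoder(embeddings[:split], backcast_targets[:split])

forecast_error = max(
    abs(decode(forecaster, e) - y)
    for e, y in zip(embeddings[split:], forecast_targets[split:])
)
backcast_error = max(
    abs(decode(backcaster, e) - y)
    for e, y in zip(embeddings[split:], backcast_targets[split:])
)
print(forecast_error, backcast_error)
```

The encoder is never refitted here: adding a new task only means fitting another small decoder on top of the same embeddings.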

Learning to predict the future of physical systems

Before moving on to complex real-world applications, we tested Newton using simple physics experiments that most people are familiar with from school, such as basic mechanical oscillation and thermodynamics experiments.

In both cases, the Newton model received real-time data from sensors and was able to accurately predict the behavior of the physical systems simply by observing the concurrent sensor data. What's remarkable is that Newton had not been specifically trained to understand these experiments – it was encountering them for the first time and was still able to predict outcomes even for chaotic and complex behaviors. This ability of an AI model to accurately predict data it has not encountered previously is often referred to as zero-shot forecasting.
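A zero-shot evaluation can be sketched as follows: the forecaster's parameters stay fixed, and it is scored directly on a system it was never fitted to. Everything below is an illustrative assumption: `extrapolate` is a hypothetical stand-in for a pre-trained model, the damped oscillation is synthetic, and a naive persistence baseline is included only for reference.

```python
import math

def extrapolate(window):
    """Hypothetical stand-in for a pre-trained forecaster with fixed parameters."""
    return 2 * window[-1] - window[-2]

def persistence(window):
    """Naive baseline: assume the next sample equals the last one."""
    return window[-1]

def zero_shot_mse(forecaster, series, context=2):
    """Mean squared one-step-ahead error, with no fitting on `series`."""
    errors = [
        (forecaster(series[t - context : t]) - series[t]) ** 2
        for t in range(context, len(series))
    ]
    return sum(errors) / len(errors)

# A synthetic damped oscillation that neither forecaster was fitted to.
unseen = [math.exp(-0.01 * i) * math.cos(0.1 * i) for i in range(500)]

model_mse = zero_shot_mse(extrapolate, unseen)
baseline_mse = zero_shot_mse(persistence, unseen)
print(model_mse, baseline_mse)
```

The key property is that `zero_shot_mse` only calls the forecaster; nothing about the unseen series is used to adjust it.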

While these classic experiments were exciting, real-world systems are far more complex and harder to describe. We then tested Newton on predicting the behavior of such systems, including city electrical demand, daily temperature, and temperature in electrical transformers, to name a few. Figure 2 demonstrates that Newton was able to accurately zero-shot forecast the behavior of these complex systems, which are challenging even for humans to model, with no additional training data.

What's exciting is that zero-shot forecasting consistently outperforms a version of Newton trained only on the target dataset. In other words, Newton, trained on a vast amount of physical sensor data from all over the world, knows more about the temperature of oil in transformers than a Newton model trained specifically on transformer oil data. This suggests that foundation models like Newton have powerful generalization capabilities, enabling them to understand physical behaviors far beyond the specific data they were originally trained on.

Practical implications

Physical AI models that can independently understand the physical world from sensor data have significant practical implications:

One model – many use cases: One of the most important implications is that we no longer need to train a separate AI model for every specific physical application. We can apply a single model like Newton across various domains and use cases, dramatically speeding up and simplifying the deployment of AI to solve real-world problems that matter.

Less training data for new use cases: A pre-trained physical AI foundation model that can accurately analyze sensor data with minimal additional training becomes invaluable in situations where collecting large datasets is difficult or impossible. This could lead to sensor-agnostic physical AI platforms that can work with specialized sensors straight out of the box, without extensive retraining.

Less computing power for new use cases: The architecture we propose – training a foundational encoder with lightweight, application-specific decoders – enables the model to be easily adapted for different applications without major modifications to its core structure. This makes it faster and more efficient to build new use cases, reducing both computational resources and costs.

Autonomous learning from observations: Newton's self-supervised training suggests it can learn physical behaviors directly from observational data. This opens the door to highly autonomous systems that can adapt to new environments or requirements without needing explicit human intervention, such as manual data labeling or model fine-tuning.
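To illustrate what self-supervised means for sensor data, here is a minimal sketch of how training examples can be built from an unlabeled recording. The post does not describe Newton's actual training objective, so the next-window forecasting setup, the function name, and the sample readings below are all assumptions made for illustration.

```python
def make_training_pairs(series, context=4, horizon=2):
    """Slice an unlabeled series into (past window, future window) pairs.

    The targets come from the recording itself, so no manual labels are needed.
    """
    pairs = []
    for start in range(len(series) - context - horizon + 1):
        past = series[start : start + context]
        future = series[start + context : start + context + horizon]
        pairs.append((past, future))
    return pairs

# Made-up temperature readings, used only to show the slicing.
readings = [20.0, 20.5, 21.1, 21.8, 22.4, 22.9, 23.1, 23.0]
pairs = make_training_pairs(readings)

for past, future in pairs:
    print(past, "->", future)
```

Every new recording yields training pairs in the same way, which is what removes the need for manual labeling.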

There are many more profound implications and exciting possibilities with Newton, from building automotive monitoring systems to self-adapting robots, or even driving scientific discovery by uncovering new physical laws. Indeed, if AI can discern the laws of nature through observation alone, what other undiscovered patterns could it reveal about the universe?

Interested in learning more? Read our paper, "A Phenomenological AI Foundation Model for Physical Signals."